
Documentation for DetectorPack PSIM 1.0.1.

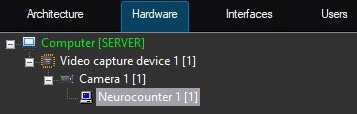

Configuring the Neurocounter module includes configuring the detection tool and selecting the area of interest. You can configure the Neurocounter module on the settings panel of the Neurocounter object, which is created on the basis of the Camera object on the Hardware tab of the System settings dialog window.

Configuring the detection tool

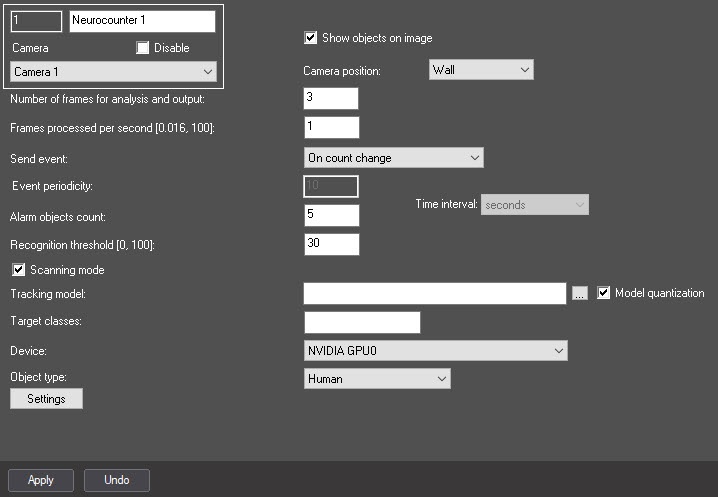

- Go to the settings panel of the Neurocounter object.

- Set the Show objects on image checkbox to frame the detected objects on the image in the debug window (see Start the debug window).

- From the Camera position drop-down list, select:

  - Wall: objects are detected only if their lower part gets into the area of interest specified in the detection tool settings.

  - Ceiling: objects are detected even if their lower part doesn't get into the area of interest specified in the detection tool settings.

- In the Number of frames for analysis and output field, specify the number of frames to be processed to determine the number of objects on them.

- In the Frames processed per second [0.016, 100] field, specify the number of frames per second that the neural network processes, in the range from 0.016 to 100. For all other frames, interpolation is performed: intermediate values are estimated from the known values of the processed frames. The higher the value, the more accurate the detection tool, but the higher the processor load.

- From the Send event drop-down list, select the condition under which an event with the number of detected objects is generated:

  - If threshold exceeded: the event is generated if the number of detected objects in the image is greater than the value specified in the Alarm objects count field.

  - If threshold not reached: the event is generated if the number of detected objects in the image is less than the value specified in the Alarm objects count field.

  - On count change: the event is generated every time the number of detected objects changes.

  - By period: the event is generated periodically:

    - In the Event periodicity field, specify the period after which the event with the number of detected objects is generated.

    - From the Time interval drop-down list, select the time unit of the period: seconds, minutes, hours, or days.

- In the Alarm objects count field, specify the threshold number of detected objects in the area of interest. It is used in the If threshold exceeded and If threshold not reached conditions. The default value is 5.

- In the Recognition threshold [0, 100] field, enter the neurocounter sensitivity as an integer from 0 to 100. The default value is 30.

Note

The neurocounter sensitivity is determined experimentally. The lower the sensitivity, the higher the probability of false alarms. The higher the sensitivity, the lower the probability of false alarms; however, some useful tracks may be skipped (see Example of configuring Neurocounter for solving typical task).
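The Send event conditions and the Alarm objects count threshold described above can be expressed as a small decision function. The Python sketch below is illustrative only and does not reflect how PSIM is implemented. The names `SendEvent`, `should_send_event`, and `period_seconds` are hypothetical. The comparisons are strict (greater than, less than), as the condition descriptions state. The sketch does not model whether the module repeats an event while a threshold condition keeps holding.

```python
from enum import Enum
from typing import Optional

# Hypothetical names for illustration only; not a PSIM or DetectorPack API.
class SendEvent(Enum):
    THRESHOLD_EXCEEDED = "If threshold exceeded"
    THRESHOLD_NOT_REACHED = "If threshold not reached"
    ON_COUNT_CHANGE = "On count change"
    BY_PERIOD = "By period"

# Seconds per unit of the Time interval drop-down list.
UNIT_SECONDS = {"seconds": 1, "minutes": 60, "hours": 3600, "days": 86400}


def period_seconds(periodicity: float, unit: str) -> float:
    """Convert Event periodicity and Time interval into seconds."""
    return periodicity * UNIT_SECONDS[unit]


def should_send_event(
    mode: SendEvent,
    count: int,
    previous_count: Optional[int] = None,
    alarm_objects_count: int = 5,  # documented default of Alarm objects count
    now: float = 0.0,
    last_event_time: Optional[float] = None,
    period: float = 0.0,
) -> bool:
    """Decide whether an event with the object count should be generated."""
    if mode is SendEvent.THRESHOLD_EXCEEDED:
        return count > alarm_objects_count
    if mode is SendEvent.THRESHOLD_NOT_REACHED:
        return count < alarm_objects_count
    if mode is SendEvent.ON_COUNT_CHANGE:
        # Assumption: the first result (no previous count) is not a change.
        return previous_count is not None and count != previous_count
    if mode is SendEvent.BY_PERIOD:
        # Assumption: fire once the period has elapsed since the last event.
        return last_event_time is None or now - last_event_time >= period
    raise ValueError(f"unknown mode: {mode}")
```

Consider the default Alarm objects count of 5. A count of 6 triggers If threshold exceeded. A count of exactly 5 triggers neither threshold condition.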

- Set the Scanning mode checkbox to detect small objects. Enabling this mode increases the system load, so we recommend specifying a small number of frames processed per second in the Frames processed per second [0.016, 100] field. By default, the checkbox is clear. For more information on the scanning mode, see Configuring the Scanning mode.

- By default, the standard neural network is initialized according to the object type selected in the Object type drop-down list and the device selected in the Device drop-down list. The standard neural networks for different processor types are selected automatically. If you use a custom neural network, click the button to the right of the Tracking model field and, in the standard Windows Explorer window, specify the path to the file.

Attention!

To train a neural network, contact AxxonSoft technical support (see Data collection requirements for neural network training). A neural network trained for a specific scene detects only objects of a certain type (for example, a person, cyclist, motorcyclist, and so on).
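The Frames processed per second field determines how many frames the neural network actually analyzes. The Scanning mode bullet above recommends keeping this value low. The frames in between are filled in by interpolation.

The Python sketch below illustrates this under several assumptions:

- The camera stream has a constant frame rate.
- Analyzed frames are spaced evenly.
- A bounding box `(x, y, width, height)` is linearly interpolated between two analyzed frames.

The function names are hypothetical. This page does not state which interpolation method PSIM actually uses.

```python
import math
from typing import List, Tuple

# Hypothetical box format: (x, y, width, height) in pixels.
Box = Tuple[float, float, float, float]


def analyzed_frame_indices(camera_fps: float, analysis_fps: float, duration_s: float) -> List[int]:
    """Indices of camera frames passed to the neural network.

    Assumption: analyzed frames are spaced evenly in a constant-rate stream.
    """
    if not 0.016 <= analysis_fps <= 100:
        raise ValueError("Frames processed per second must be in [0.016, 100]")
    # Camera frames per analyzed frame; never less than one.
    step = max(camera_fps / analysis_fps, 1.0)
    total_frames = int(camera_fps * duration_s)
    count = math.ceil(total_frames / step)
    return [int(k * step) for k in range(count)]


def interpolate_box(box_a: Box, box_b: Box, frame_a: int, frame_b: int, frame: int) -> Box:
    """Linearly interpolate a box for a frame between two analyzed frames."""
    t = (frame - frame_a) / (frame_b - frame_a)
    x, y, w, h = (a + t * (b - a) for a, b in zip(box_a, box_b))
    return (x, y, w, h)
```

Take a 25 fps camera stream with Frames processed per second set to 5. In that case, `analyzed_frame_indices(25, 5, 2)` selects every fifth frame (0, 5, ..., 45), and the four frames between each pair are interpolated. Raising the rate shortens the interpolated gaps. This matches the trade-off described for that field: more accurate detection at a higher processor load.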

- Set the Model quantization checkbox to enable model quantization. By default, the checkbox is clear. Quantization reduces the consumption of GPU processing power.

Note

- AxxonSoft conducted a study in which a neural network model was trained to identify the characteristics of the detected object. The study showed that model quantization can either increase or decrease the recognition percentage, due to the generalization of the mathematical model. The difference in detection is within ±1.5%, and the difference in object identification is within ±2%.

- Model quantization is applicable only to NVIDIA GPUs.

- The first launch of a detection tool with quantization enabled may take longer than a standard launch.

- If GPU caching is used, subsequent launches of a detection tool with quantization run without delay.

- If necessary, specify the class of the detected object in the Target classes field. To display tracks of several classes, separate them with a comma and a space, for example, 1, 10.

The numerical values of classes for the embedded neural networks: 1 for Human/Human (top view), 10 for Vehicle.

Note

- If you specify both classes present in the neural network and classes missing from it, only the tracks of the classes present in the neural network (Object type, Neural network file) are displayed.

- If you specify only classes missing from the neural network, no tracks are displayed.
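The Target classes rules above amount to two steps: parse the comma-separated list, then intersect it with the classes that the selected neural network supports. The following Python sketch assumes the embedded class IDs listed above (1 for Human/Human (top view), 10 for Vehicle). The function name `effective_target_classes` and the `network_classes` argument are hypothetical. The sketch also assumes that an empty field means no class filtering is applied, which this page does not state explicitly.

```python
from typing import Optional, Set


def effective_target_classes(field_value: str, network_classes: Set[int]) -> Optional[Set[int]]:
    """Return the classes whose tracks are displayed.

    None: the field is empty, so no class filter is applied (assumption).
    Empty set: none of the specified classes exist in the neural network,
    so no tracks are displayed.
    """
    text = field_value.strip()
    if not text:
        return None
    # "1, 10" -> {1, 10}; int() tolerates the space after each comma.
    requested = {int(part) for part in text.split(",") if part.strip()}
    # Classes missing from the neural network are silently dropped.
    return requested & network_classes
```

Take a network that supports only class 1. With `"1, 7"` the sketch keeps `{1}`, and with `"7"` it returns an empty set. These results mirror the two rules in the note.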

- From the Device drop-down list, select the device on which the neural network operates: CPU, one of the NVIDIA GPUs, or one of the Intel GPUs. If you select Auto (default value), the device is selected automatically: NVIDIA GPU has the highest priority, followed by Intel GPU, then CPU.

Attention!

- We recommend using the GPU.

- It may take several minutes to launch the algorithm on an NVIDIA GPU after you apply the settings. You can use caching to speed up subsequent launches (see Optimizing the operation of neural analytics on GPU).
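The Auto option of the Device drop-down list follows a fixed priority: NVIDIA GPU, then Intel GPU, then CPU. The Python sketch below shows that fallback order. The device identifiers (`"nvidia_gpu:0"`, `"intel_gpu:0"`, `"cpu"`) and the function name `resolve_device` are hypothetical. When several GPUs of the same vendor are present, the sketch takes the first one listed, which is an assumption rather than documented behavior.

```python
from typing import Sequence

# Priority order for Auto, as documented: NVIDIA GPU, then Intel GPU, then CPU.
AUTO_PRIORITY = ("nvidia_gpu", "intel_gpu", "cpu")


def resolve_device(selected: str, available: Sequence[str]) -> str:
    """Return the device the neural network runs on (hypothetical identifiers)."""
    if selected != "auto":
        # An explicitly selected device is used as-is if it exists.
        if selected not in available:
            raise ValueError(f"device {selected!r} is not available")
        return selected
    for kind in AUTO_PRIORITY:
        for device in available:
            # Assumption: the first device of the highest-priority kind wins.
            if device.startswith(kind):
                return device
    raise RuntimeError("no supported device found")
```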

- From the Object type drop-down list, select the object type:

  - Human: the camera is directed at a person at an angle of 100-160°.

  - Human (top-down view): the camera is directed at a person from above at a slight angle.

  - Vehicle: the camera is directed at a vehicle at an angle of 100-160°.

  - Person and vehicle (Nano): person and vehicle recognition, small neural network size.

  - Person and vehicle (Medium): person and vehicle recognition, medium neural network size.

  - Person and vehicle (Large): person and vehicle recognition, large neural network size.

Note

Neural networks are named after the objects they detect. The names can include the neural network size (Nano, Medium, Large), which indicates the amount of resources consumed. The larger the neural network, the higher the accuracy of object recognition.

Selecting the area of interest

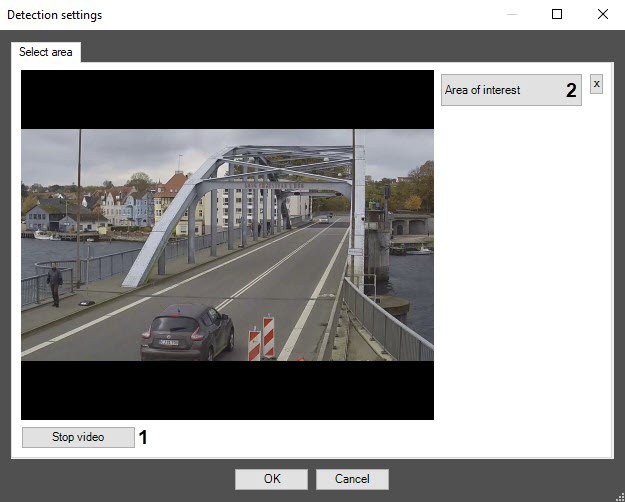

- Click the Settings button. The Detection settings window opens.

- Click the Stop video button (1) to pause the playback and capture the frame.

- Click the Area of interest button (2) to specify the area of interest. The button will be highlighted in blue.

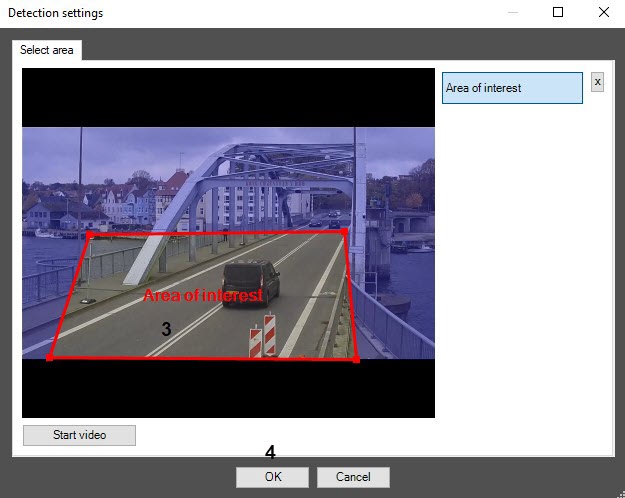

- On the captured frame, sequentially set the anchor points of the area in which the objects will be detected. The rest of the frame will be faded. If you don't specify the area of interest, the entire frame is analyzed.

Note

- You can add only one area of interest. If you try to add a second area, the first one will be deleted.

- To delete an area, click the button to the right of the Area of interest button.
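The area of interest and the Camera position setting together decide which detections are counted. The following Python sketch illustrates one plausible reading, under these assumptions:

- The area is the polygon formed by the anchor points.
- A detection is a bounding box `(x, y, width, height)` in frame coordinates, with y increasing downward.
- Wall counts a box only if its bottom-center point (its lower part) lies inside the polygon.
- Ceiling counts a box if any of a few sample points lies inside. This approximates "any part of the object is in the area".

The exact geometric test PSIM uses is not documented here, and all names are hypothetical. As documented, when no area is set, the entire frame is analyzed.

```python
from typing import List, Optional, Tuple

Point = Tuple[float, float]
Box = Tuple[float, float, float, float]  # hypothetical (x, y, width, height)


def point_in_polygon(px: float, py: float, polygon: List[Point]) -> bool:
    """Ray-casting test: count edge crossings to the right of the point."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > py) != (y2 > py):  # edge spans the horizontal ray
            x_cross = x1 + (py - y1) * (x2 - x1) / (y2 - y1)
            if px < x_cross:
                inside = not inside
    return inside


def is_counted(box: Box, polygon: Optional[List[Point]], camera_position: str) -> bool:
    """Apply the Wall/Ceiling rule to one detection (assumed geometry)."""
    if not polygon:  # no area of interest: the entire frame is analyzed
        return True
    x, y, w, h = box
    bottom_center = (x + w / 2, y + h)
    if camera_position == "wall":
        # Wall: only the lower part of the object is checked.
        return point_in_polygon(*bottom_center, polygon)
    if camera_position == "ceiling":
        # Ceiling: approximate "any part inside" with corners, center, bottom-center.
        samples = [(x, y), (x + w, y), (x, y + h), (x + w, y + h),
                   (x + w / 2, y + h / 2), bottom_center]
        return any(point_in_polygon(px, py, polygon) for px, py in samples)
    raise ValueError(f"unknown camera position: {camera_position}")
```

Take an area of interest covering the upper half of a 100 × 100 frame. A box whose top is inside the area but whose lower edge hangs below it is counted with Ceiling but not with Wall.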

- Click the OK button to close the Detection settings window and return to the settings panel of the Neurocounter object.

- Click the Apply button to save the changes.

Configuring the Neurocounter module is complete.